AI as Simulacra

Why Generative AI Feels Wrong and What That Means For Us

I. Introduction

The profound impact of artificial intelligence, and particularly the meteoric rise of generative AI, is undeniable. The immense potential and poignant drawbacks have sparked intense debate. Large Language Models (LLMs) like ChatGPT offer anyone an instant consultant, brainstorming partner, translator, creative assistant, or even technical expert. Similarly, image and video generation models accelerate concept creation and democratize graphic design. Yet, despite these clear advantages, a deep skepticism persists around the technology. This is, in part, due to the many well-founded ethical, economic, and political concerns. However, there exists a more fundamental aversion that lies beneath these practical objections: a core human preference for reality and resistance to simulation.

The fault of AI lies in its fundamental separation from reality, in its lack of experience, conscious intent, and human authorship. An AI-generated poem, though grammatically flawless, is not produced from a history of suffering, joy, or memories, but from statistical correlations in human language. An aesthetically pleasing AI image is not the result of an artist’s encounter with the world but of a system recombining patterns it can never experience firsthand. These models do not participate in reality; they operate on representations of it, generating new symbols from prior symbols. By simulating a reality they cannot inhabit, AI systems lack something fundamental to that reality. This loss exists in spite of, not because of, the technology’s practical shortcomings.

AI provides us with a distinct, modern example of our preference for reality over simulation. However, there exist many other areas of modern life, from consumer habits to interpersonal relationships, which can be similarly described as simulation. This article argues that the unease surrounding generative AI reflects a deeper human preference for reality, and that examining AI through Baudrillard’s theory of simulacra shows why our scrutiny of this new technology must extend beyond AI systems to the many other forms of simulation that mediate everyday life.

II. Preference Toward Reality / Aversion to the Unreal

The human inclination towards ‘the real’ is not unique to the domain of AI. This phenomenon is evident in our consumer habits and social attitudes, and it is explored in works of popular fiction and philosophy. Food companies, for instance, use phrases like “made with real fruit” to distinguish their product from products that may use artificial flavors. There are strict rules that govern such labels because of the allure this ‘realness’ has on consumers. In Japan, for example, manufacturers cannot depict realistic fruit on their packaging unless the percentage of fruit juice is above a certain level. This illustrates that even the suggestion of a real fruit can meaningfully influence a buyer.

The diamond market isolates the quality of ‘realness’ from other desirable traits, like nutrition in the case of food items. Lab-grown diamonds typically fetch dramatically lower prices than similar natural ones, despite being chemically and structurally identical. What, then, gives the natural diamond its added value? In this case, it is nothing but the knowledge of its authenticity. There is no experiential difference to the unaided senses, only an understanding of the origin and that it formed through natural processes. This information does not manifest physically in any obvious way; it takes a trained eye and special tools to discern the difference. It is this perceived “realness,” grounded in knowledge rather than experience, that grants the natural diamond its elevated worth.

This preference for the real, even at the expense of other desires, is explored through major philosophical and fictional narratives. The Experience Machine is a thought experiment introduced by Robert Nozick in his 1974 book Anarchy, State, and Utopia [1]. He imagines a machine that can allow humans to exist in a perfect and absolute state of pleasure. He then asks, if given the choice, would anyone willingly subject themselves to this machine? A similar idea is explored in Aldous Huxley’s novel Brave New World [2]. In this novel, Huxley writes of a society that is kept perpetually content through a drug called soma. While the Experience Machine was originally an argument against hedonism and Brave New World is a cautionary tale about the sacrifice of freedom, they both also confront the conflict between reality and simulation. On the surface, the simulated lives they offer are enticing, but the idea that this happiness is only a simulation must be grappled with. In both cases, the simulated existence is, by definition, more pleasurable than reality, yet something is still missing. When the choice is between perfect pleasure and the real world, many hesitate to abandon the real in favor of the simulation. We are drawn to whatever it is that the simulation lacks and would prefer a less pleasurable existence if it meant a real existence.

This idea of choice between reality and simulation is perfectly encapsulated in the 1999 film The Matrix [3]. In this movie, the main character, Neo, discovers that the world he and all other humans inhabit is an elaborate computer simulation being run in their minds. The humans still exist in the real world as inert power sources for the machines which now dominate it. The Earth is depicted as a post-apocalyptic hellscape where the sun has been blocked out and the land is now covered in a mechanical network entirely hostile to human life. Despite this, when given the choice to return to blissful ignorance and live in the relatively pleasant simulation or escape into the brutal hell that is the real world, Neo chooses the real.

III. Baudrillard’s Hyperreal: The Precession of Simulacra

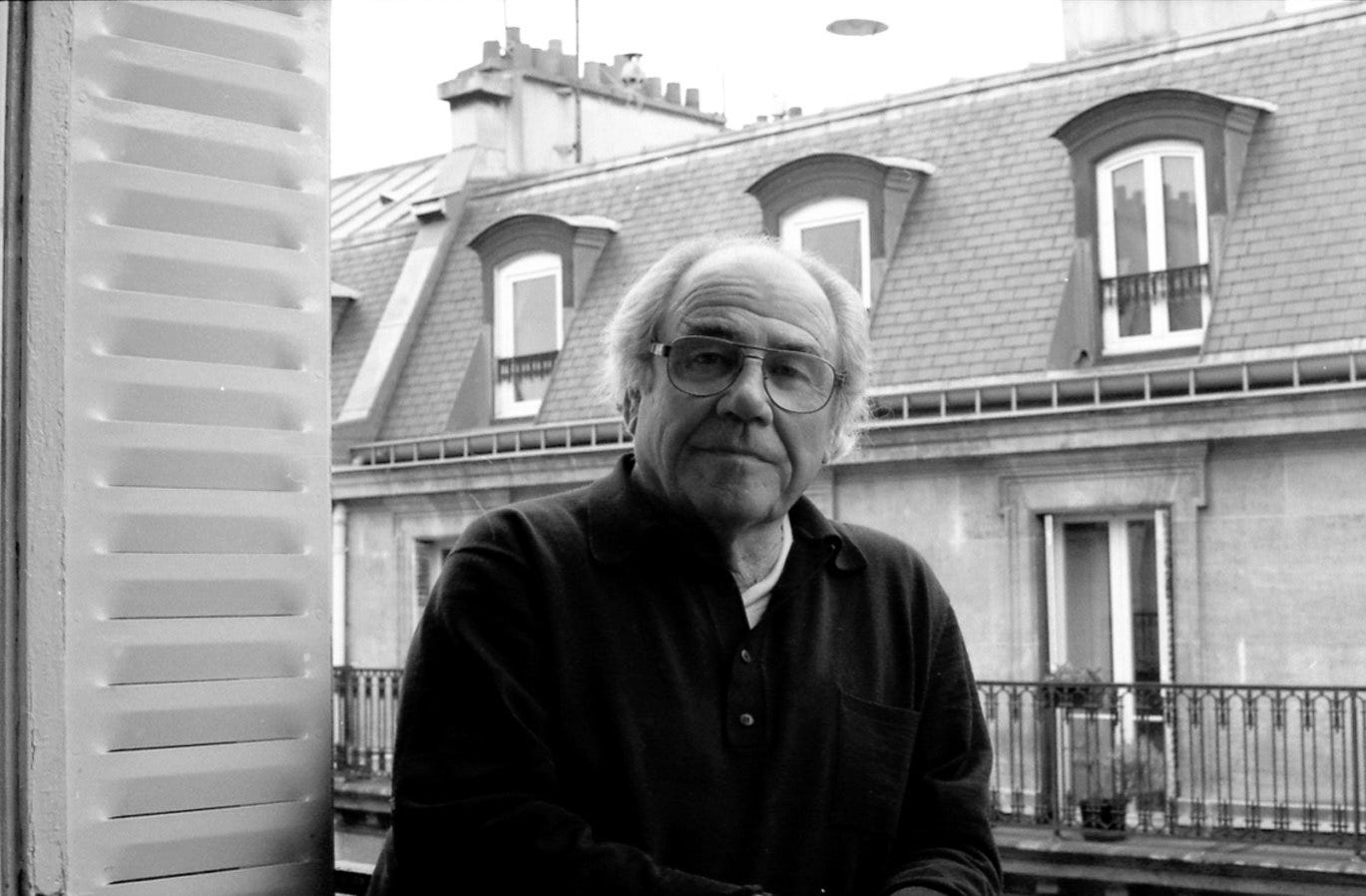

The Matrix was inspired by the book Simulacra and Simulation [4] by Jean Baudrillard, a French sociologist and philosopher. Through this book and many of his other works, he explores the idea of reality and specifically how our modern world differs from it. He claims that the media-centric world we live in has become composed mostly, if not entirely, of simulations and that almost all of the signs and symbols we interact with are mere simulacra: copies of things that do not, or no longer, exist.

This idea stems from the fact that all media are, by nature, representational. When trying to capture or recreate a real event, the creator will inevitably have to make judgments or interpretations. A photo is always taken at a certain angle, a news story must always summarize details, and an author always has a bias. The creator, even when attempting to be genuine, can do no more than simulate their experience. In a media‑saturated society, these simulations increasingly mediate our contact with the world, so that our worldview is formed more by representations than by direct encounters. Over time, the media, symbols, and culture we create rest on layers of prior representations instead of on any stable underlying reality. For Baudrillard, this is the crucial shift. The copy no longer follows the real but precedes it, so that simulations come to define what counts as reality in the first place. In this state of “hyperreality,” the representations do not just reflect the world; they become more real for us than whatever they were supposed to depict.

Society is built upon these layers of interpretation. Our ideas, products, desires, and cultural norms result from this precession of simulating the simulated. In a modern sense, this can be seen through social media. A young person might build their personality and interests to imitate their favorite online influencer. The influencer, of course, carefully curates their online image to represent themselves exactly as they want, often driven by a desire to maximize engagement which itself is guided by recommendation algorithms. The young person imitates the distorted image of the real person, who themselves distorts their image to conform to algorithms driven by a simulation of the consumer’s desire. Each layer symbolizes the next. This, however, becomes the young person’s reality. Through this imitation, they have become a simulacrum, a copy of that which does not exist.

Baudrillard describes this precession of simulation into simulacra as consisting of four stages:

Copy (The Sacramental Order): A faithful symbol of the real.

Distortion (The Order of Maleficence): A symbol that masks or interprets reality.

Deception (The Order of Sorcery): A symbol that conceals the absence of reality.

Pure Simulacra (The Order of Simulation): A symbol that refers to no reality at all.

He illustrates this idea through a version of ‘Borges’ Fable’ [4, pp. 1-2] in which a mad cartographer is obsessed with making a map that is a perfect representation of a kingdom. This pursuit evolves to a point at which the map, laid over the land, conceals and eventually replaces the actual kingdom. A regular map is an example of the first stage of simulation. It is a copy of an original which is obviously a symbol and makes no attempt to hide this fact. The next stage comes when the cartographer builds a map that is so detailed it mimics the land almost exactly. Now, any accidental or purposeful differences may be taken as truth, allowing the map to begin to distort reality. The cartographer continues to build a map that is so ‘perfect’ it physically spans the entirety of the territory, point to point. The inhabitants now reside on top of the map as it is laid over the land and conceals the real world beneath it. They may begin to forget, or not realize, that the environment they interact with was once meant to be a copy of the real state. It now conceals the absence of the territory it was meant to symbolize. This is deception. Finally, we reach pure simulacra as the citizens, now living on and within the map, begin to create representations of the world they now inhabit. A map of this world is a symbol representing the cartographer’s creation, which itself was meant to be a copy of the real world that, for all intents and purposes, no longer exists as it has been buried and forgotten beneath its own recreation.

IV. AI as the Epitome of the Hyperreal

The precession of simulation to pure simulacra is compellingly analogous to the rise of generative AI models we see today. Simple AI programs can simulate ‘intelligence’ in useful ways without pretending to actually be intelligent. This is in stark contrast to how software like ChatGPT is designed to make you feel as though you are communicating with an intelligent partner. Within this enormous range of capabilities, we are able to distinctly pick out the characteristics of the four stages of simulation.

1. Copy

The term artificial intelligence is often conflated with machine learning when, in fact, artificial intelligence refers to the “use of technologies to build machines and computers that have the ability to mimic cognitive functions associated with human intelligence” [5]. The key word here is ‘mimic’. Artificially intelligent systems complete tasks not necessarily by copying the mechanisms of natural intelligence, but by producing similar outcomes within a set of constraints. In this sense, simple AI algorithms are symbolic of human intelligence but by no means hide the fact that they are not truly intelligent. One example is the A* (pronounced A-star) search algorithm, which is used to find the shortest path to a target. It is a relatively simple set of rules and heuristics that simulates an intelligent task (finding an optimal path) by following a straightforward set of instructions. It symbolizes an intelligent creature finding its way through a maze, but it would be difficult to mistake this for anything but a simulation.
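To make concrete how little machinery sits behind this kind of “intelligence,” here is a minimal sketch of A* on a small grid maze. The grid encoding (0 for open, 1 for wall), the four-direction movement, the Manhattan-distance heuristic, and the names `a_star` and `maze` are all illustrative assumptions rather than anything prescribed by the algorithm itself.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest path of (row, col) cells from start to goal, or None."""

    def heuristic(cell):
        # Manhattan distance: never overestimates on a grid with unit-cost,
        # four-direction moves, so the first path to reach the goal is shortest.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    # Each frontier entry is (estimated total cost, cost so far, cell).
    frontier = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}

    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links backwards to rebuild the path.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale entry; a cheaper route to this cell was found later
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        frontier,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None  # the goal cannot be reached


# A hypothetical 4x4 maze: 0 is open floor, 1 is a wall.
maze = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]

if __name__ == "__main__":
    print(a_star(maze, (0, 0), (3, 3)))
```

Nothing here resembles a creature exploring a maze. The whole procedure is a priority queue and a distance estimate, which is why it is so hard to mistake for anything but a symbol of the task.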

2. Distortion

Machine learning refers to a subset of artificial intelligence which is “focused on algorithms that can ‘learn’ the patterns of training data” in order to “make decisions or predictions without [explicit instructions]” [6]. With machine learning algorithms, one can begin to see the distortion of what they are meant to represent. One of the simplest examples is the linear regression model. What this model does, in simple terms, is try to fit a trend line to a set of data points. It can then use this trend line to make predictions on new input it has not seen before. Even these simplest models distort reality in two ways. First, by their very name, they are described as learning. This allows us to anthropomorphize what is in reality a statistical model. It presents a level of familiarity that obscures the actual mechanism of the simulation. Second, they can never make perfect predictions, yet their results are taken and acted upon in many ways. Linear regression and other similar models are used in fields like business forecasting and insurance portfolios. Though they are very useful, these models create a slightly distorted image of the world and their predictions are then taken as representative of and used to influence that same world. In this sense, these simulations become real, and foreshadow the hyperreality of AI.
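The “learning” in question can be written out in a few lines. The sketch below fits a trend line by ordinary least squares and then uses it to predict an unseen input. The data points and the names `fit_line` and `predict` are made up for illustration, and least squares is only one common way of fitting such a line.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized,
    # since the 1/n factors cancel in the ratio).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Read a prediction off the fitted trend line."""
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical observations that lie close to, but not exactly on, a line.
    xs = [1, 2, 3, 4, 5]
    ys = [2.1, 3.9, 6.2, 7.8, 10.1]
    slope, intercept = fit_line(xs, ys)
    print("prediction for x = 6:", predict(slope, intercept, 6))
    # The residuals are the gap between the line and the observed points.
    print("residuals:", [y - predict(slope, intercept, x) for x, y in zip(xs, ys)])
```

The nonzero residuals are the distortion in miniature: the line summarizes the points without ever matching them, yet it is the line, not the points, that gets acted upon.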

3. Deception

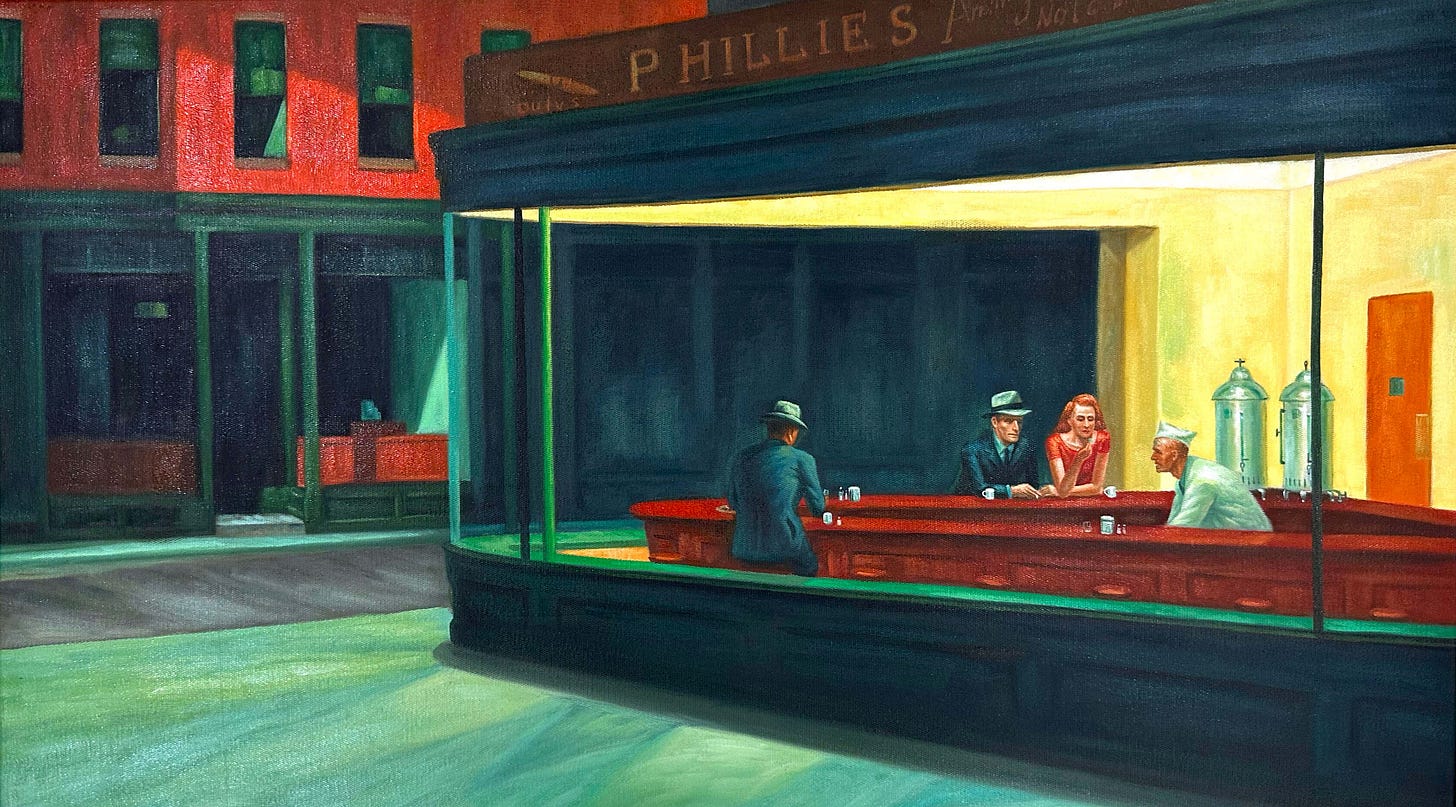

Modern AI algorithms are amazingly deceptive. The release of ChatGPT signified a dramatic shift in the power of AI to conceal the absence of reality. This is due in part to technical advancements and in part to the form factor in which it was released. The chat window through which users most commonly interact with Large Language Models (LLMs) is specifically designed to mimic the experience of messaging another person. In other words, it is designed to conceal the fact that you are not. The conversational flow and complex responses create a powerful sense of an intelligent partner which masks the reality that there is not one. Non-text-based generative models are also becoming increasingly deceptive. This is evidenced by online communities like the ‘isThisAI’ subreddit, which is a forum dedicated to discussing “whether or not a picture, video or anything is AI-generated” [7]. Apart from the ability to actually trick the viewer about its origin, AI-generated art and media are inherently deceptive. The inception of generative AI marks the first time in history that media does not, by default, imply an intentional creator. For the first time ever, the question “was this art created by someone?” is a relevant one. To whatever degree, it does have the capacity to conceal the lack of a real author. It can simulate the art and eliminate the artist; it can simulate the result and eliminate the origin; it can simulate the real and eliminate the real.

4. Pure Simulacra

The ultimate stage is reached when the replica refers to no reality at all. We currently find ourselves on the precipice of this era with generative AI. Regardless of how one might feel about it, AI permeates all corners of society and especially the internet. The “dead internet” theory has gained more credence than ever, as the web becomes dominated by content that has lost its human referent [8]. A major concern for the continued advancement of AI is data contamination or model collapse [9]. Almost all of the accessible, original, human-created data has already been collected and used to train the current most powerful models. Therefore, future datasets used to train subsequent models will increasingly contain data that was generated by those models in the first place. This circular, self-referential training loop means that AI is no longer learning from human reality, but from a growing corpus of its own output. Even if the content is created by a human, it will become increasingly unlikely that that person has not been influenced by other AI sources (through AI-powered marketing, recommendation algorithms, or consuming AI-generated content). Therefore, even if we could filter out all of the data directly generated by AI, the next age of AI models will be trained on a body of data which represents a world already dominated by the influence of its predecessors. It will be, and has already become, a simulation feeding a simulation.
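A toy simulation can illustrate the shape of this loop. It is not the statistical analysis from the cited work, and it assumes a deliberately crude “model” that knows nothing except the empirical distribution of its training corpus, so that generating new data amounts to resampling the old data with replacement. The function name `simulate_self_training` and all parameter values are hypothetical.

```python
import random


def simulate_self_training(vocab_size=1000, corpus_size=1000, generations=10, seed=0):
    """Count the distinct items that survive each round of self-referential training."""
    rng = random.Random(seed)
    # Generation 0 stands in for "human" data: every item is distinct.
    corpus = list(range(vocab_size))
    distinct_counts = [len(set(corpus))]
    for _ in range(generations):
        # The next corpus is drawn entirely from the previous one, so an item
        # that fails to be sampled once can never reappear in later generations.
        corpus = rng.choices(corpus, k=corpus_size)
        distinct_counts.append(len(set(corpus)))
    return distinct_counts


if __name__ == "__main__":
    for generation, count in enumerate(simulate_self_training()):
        print(f"generation {generation}: {count} distinct items remain")
```

In this sketch the variety of the corpus can only shrink or hold steady, never grow, because each generation has access to nothing outside the copy that came before it.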

As AI continues to advance both technologically and in its influence, we will become just like the citizens living on the mad cartographer’s map. What was once supposed to simulate reality will become so fundamental that it will replace reality itself. In this stage, the resulting image, text, or piece of media is a symbol of nothing but the patterns within the data pool. It entirely lacks the original human referent, the artist’s inspiration, the genuine event, the maker’s conscious choice. Despite this, these algorithms continue to drive so many of the digital processes which pervade our lives. As we become more and more reliant on, and influenced by, these simulations, they risk becoming reality itself. AI is transforming our environment into hyperreality. At this stage, AI is the culmination of Baudrillard’s ideas, transforming the original symbols of human expression (like art and text) into nothing more than symbols of a simulation of those things. This is pure simulacra: a copy that no longer references an original truth but rather the copy that preceded it.

V. The Wider World of Simulacra

AI makes the judgment of non-reality easy because it is so obviously a simulation. We understand that, by its very nature, when we interact with AI we are interacting with something artificial. As we have seen, this elicits a loss, a movement away from what is typically desirable. However, we live in a world filled with simulation. Especially in the digital age, almost everything we see, interact with, learn from, and respond to can be seen as representative of something more ‘real’. The ways we communicate with each other, learn about the world, and navigate our consumerist society are all simulated experiences, yet they are so fundamental in our lives that it is often easy to forget this fact.

Take any text-based communication, such as email, instant messaging, or texting. Much, if not most, of our modern communication happens through these media. These forms of communication are ubiquitous in our daily lives, yet they are simulations of ‘real’ conversation. As with all simulations, aspects of the real experience are lost. You cannot read facial expressions or hear tone through text, and you miss numerous other subtle cues present in a face-to-face interaction. Yet, this form of communication is so omnipresent that we hardly ever consider this difference. The personal interactions which would have been necessary for typical daily tasks, like conducting business or catching up with loved ones, are simulated through the rapid transfer of text. On the surface, this is excellent, allowing for more efficient business and frequent connection with friends and family. However, in practice, we see that the convenience of this simulation allows it to replace its real counterpart. Business has less of a need for in-person meetings, and we may feel less of a desire to meet up with a friend if we have been texting them all week long. While this shift is not necessarily negative, it is a considerable change to our society that is driven by a simulation. It is, therefore, worth considering.

Other parts of our daily life are similarly mediated. Consider the frictionless entertainment ecosystem created by recommendation algorithms, or the pervasive nature of targeted marketing and advertising, where we, as individuals and as part of broader consumer trends, are simulated and then shown a reflection of that simulation. Media companies, whether news outlets or social platforms, profit by maximizing engagement rather than accuracy or depth. As the saying goes, in many of these environments we are not the customer but the product, offered as bits of attention to advertisers. In this attention economy, the primary goal is not to inform or enrich us but to hold our gaze for as long as possible. Companies build detailed models of our behavior, what we click on, pause over, or share, and then feed that simulated version of “us” back as an endless stream of tailored content. What may feel like frictionless convenience is really the removal of choice and surprise. Instead of searching, browsing, or risking boredom, we are continuously presented with whatever is most likely to keep us engaged. Over time, this has a profound effect on both ourselves and our culture. Initially, these systems approximate what we already like, but eventually the recommendations narrow and stabilize around whatever proves most effective at capturing our attention. What we consume because it is pushed to us gradually becomes what we experience as our genuine interests. In Baudrillard’s terms, the algorithmic profile of our desire and attention begins to precede the real, defining what we encounter and, in turn, who we become. The simulation of our attention does not merely reflect our wants; it trains them, until the hyperreal feed stands in for an open-ended, self-directed engagement with the world.

This phenomenon is not confined to the 21st century. One of Baudrillard’s famous works, The Gulf War Did Not Take Place [10], illustrates that hyperreality can stem from much simpler forms of media. While he does not deny that this conflict occurred, he asks whether the events of what has been called The Gulf War unfolded exactly as they were presented. For the typical Western audience, this conflict did not take place before their eyes. They did not witness the undoubted violence and tragedies, nor did they listen in on the countless meetings between leaders. They did not hear the bombs, smell the burning oil, or feel the fear. Rather, they read about these things in the newspaper, listened to the stories on the radio, and watched reporters explain what was going on. These representations of the conflict may or may not have been created with the intent of accuracy and information but are still just that: representations, symbols of the actual events translated into various forms of media. This is what Baudrillard means when he says this war did not happen. What we understand to be the Gulf War is what we learn and interpret from these representations of the events. Our experience is that which is created in our mind while reading words on a page or listening to someone else’s abridged explanation. These experiences shape our understanding of the world, and in this way, these simulations become our reality.

The point Baudrillard emphasizes is that we interact with simulacra every day, all of the time. He thought this in 1981, and since then, the progression of technology, the internet, and now AI seems to almost caricature his ideas. As a society, we increasingly interact with our fundamental world through simulations of what one might consider real. We work remotely through computers and interact with our coworkers and bosses through text for money that will most likely never have a physical form, all to buy things which make us feel like the idealized and curated images of people we see on social media. These layers of representation have become so ubiquitous that we do not even realize they are there. Through slow integration, they have become our reality.

VI. Return to the Real: Making a Choice

We currently stand at a unique crossroads of technological advancement, marked by the rapid rise of generative AI, and find ourselves confronted with profound questions about reality and its simulation. AI is more than a mere productivity tool; it is a showcase of our deep, collective unease about the loss of authenticity in an increasingly mediated world. The layers of simulation that we interact with, whether through the lens of AI, social media, or even our everyday consumer choices, are not new. Humans have been creating representations to symbolize their world for all of recorded history. However, the stakes are higher now, as the simulations of today are more complex, pervasive, and influential than ever before.

In this moment of rapid change, we are faced with a fundamental question: what is lost when we substitute the simulated for the real? The appeal of generative AI lies in its ability to replicate human productivity and creativity, but in doing so, it strips away a vital essence of the final result. This loss is not unique to AI, but rather makes visible a broader condition of the world we are creating, one in which symbols increasingly replace the very substance they were meant to represent. In this sense, Baudrillard’s concept of hyperreality is no longer an abstract philosophical idea; it has become our everyday reality. What were once tools for representation have increasingly become the dominant framework through which we understand and interact with the world.

The discomfort we feel toward AI, then, is not solely a reaction to the technology itself, but an expression of a deeper anxiety about what is being lost through this shift. As Baudrillard warns, when simulations replace reality, we risk losing touch with the fundamental human truths that ground us: authentic connections, genuine creativity, the richness of lived experience, and even truth itself. For this reason, the same skepticism that we reserve for AI should be applied to the countless other forms of simulation that permeate our lives. Whether it’s through the curated images on social media, the algorithms shaping our wants and desires, or even the way we communicate through text rather than speaking to one another, we are continuously navigating a world that blurs the line between the real and the artificial.

In this age of hyperreality, the challenge is not to reject the digital world or the conveniences it offers but to cultivate a deeper awareness of what is being lost as we immerse ourselves in these simulations. Just as we scrutinize AI for its lack of authenticity, we must also question the other layers of representation that dominate our lives. The conversation about AI is not just about technology; it is a philosophical inquiry into the nature of reality itself. It allows us to consider what it means to be human, what makes art genuine, and what is lost through simulation. This technology has not only delivered the efficiencies advertised on the surface, but has also handed us a powerful lens for noticing which aspects of life we would rather keep real. It opens a window onto the other simulations that shape our world, from mediated relationships to algorithmically curated desires. Are we, like the inhabitants of the cartographer’s map, losing sight of the real world beneath the simulacra, or can we find a way to preserve the essence of reality while embracing the benefits of a digitally augmented existence? If we can cultivate this awareness and practice making these distinctions, we can gain the freedom to decide what we allow to fade into simulation and what must remain grounded in reality, rather than sitting back and quietly letting those decisions be made for us.

Mikail Krochta holds a degree in Computer Science from North Carolina State University and minored in Mathematics. He is passionate about lifelong learning, philosophy, social responsibility, and a commitment to life beyond a 9 to 5. You can often find him playing board games, exploring used book stores, and arguing about philosophy with his fiance.

[1] Nozick, R. (1974). Anarchy, State, and Utopia. Basic Books.

[2] Huxley, A. (1932). Brave New World. Chatto & Windus.

[3] Wachowski, L., & Wachowski, L. (Directors). (1999). The Matrix [Film]. Warner Bros. Pictures.

[4] Baudrillard, J. (1994). Simulacra and Simulation (S. F. Glaser, Trans.). University of Michigan Press. (Original work published 1981).

[5] Definition and differences between Artificial Intelligence (AI) and Machine Learning (ML). (n.d.). IBM. Retrieved December 1, 2025, from https://www.ibm.com/think/topics/machine-learning

[6] Ibid.

[7] r/IsThisAI. (n.d.). Reddit. Retrieved December 1, 2025, from https://www.reddit.com/r/IsThisAI/

[8] Muzumdar, P., Cheemalapati, S., RamiReddy, S. R., Singh, K., Kurian, G., & Muley, A. (2025). The Dead Internet Theory: A Survey on Artificial Interactions and the Future of Social Media. Asian Journal of Research in Computer Science, 18(1), 67–73. https://doi.org/10.9734/ajrcos/2025/v18i1549

[9] Seddik, M. E. A., Chen, S.-W., Hayou, S., & Youssef, P. (2024). How Bad is Training on Synthetic Data? A Statistical Analysis of Language Model Collapse. arXiv preprint arXiv:2404.05090. Retrieved from https://arxiv.org/abs/2404.05090

[10] Baudrillard, J. (1995). The Gulf War Did Not Take Place (P. Patton, Trans.). Indiana University Press. (Original work published 1991).